Multimedia Q&A is the task of producing an accurate natural-language answer given a natural image and a textual question about that image. Its applications include assisting visually impaired people and supporting human-computer interaction. In recent years, the rapid development of deep learning has driven substantial progress in multimedia question answering based on deep neural networks.

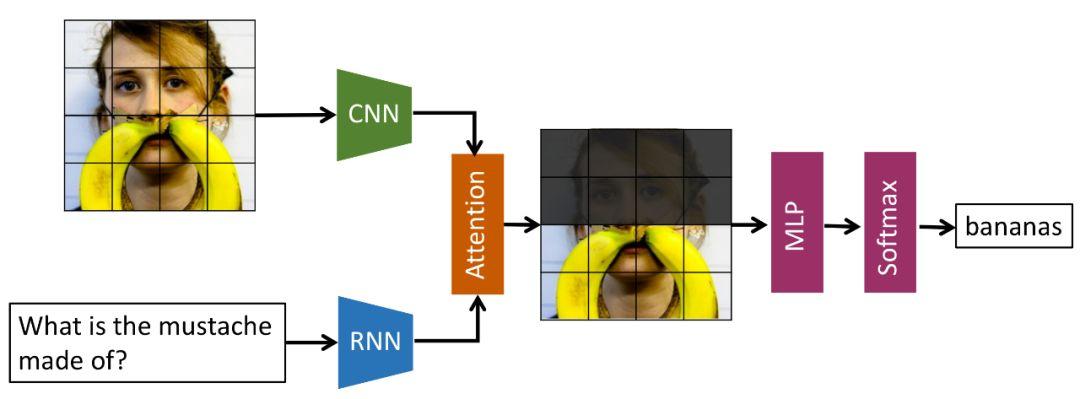

The general approach to multimedia Q&A is as follows: a pre-trained convolutional neural network extracts visual features from the input image; a recurrent neural network extracts textual features from the input question; the two types of features are then fused so that they can interact, and the fused representation is classified to obtain the answer.
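The fusion and classification steps of this pipeline can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the feature vectors are random stand-ins for real CNN and RNN outputs, element-wise multiplication is one assumed fusion choice (the text does not name one), and `answer_question`, the weights, and the answer vocabulary are hypothetical and untrained.

```python
import math
import random


def linear(x, weights, bias):
    """Apply a dense layer: one output score per row of `weights`."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def answer_question(visual_feat, text_feat, weights, bias, answers):
    """Fuse the two feature vectors and classify over candidate answers."""
    # Fusion by element-wise product (an assumed choice, not the group's method).
    fused = [v * t for v, t in zip(visual_feat, text_feat)]
    probs = softmax(linear(fused, weights, bias))
    best = max(range(len(answers)), key=lambda i: probs[i])
    return answers[best], probs


# Random stand-ins for CNN image features and RNN question features.
rng = random.Random(0)
dim = 8
answers = ["yes", "no", "two", "red"]  # hypothetical answer vocabulary
visual_feat = [rng.uniform(-1, 1) for _ in range(dim)]
text_feat = [rng.uniform(-1, 1) for _ in range(dim)]
weights = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in answers]
bias = [0.0] * len(answers)

predicted, probs = answer_question(visual_feat, text_feat, weights, bias, answers)
print(predicted, [round(p, 3) for p in probs])
```

Treating answering as classification over a fixed answer vocabulary matches the description above; in a real system the classifier weights would be learned from question-answer pairs rather than drawn at random.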

The issues our group currently focuses on are mainly the language prior problem and the use of external knowledge to assist multimedia Q&A.

Multimedia dialog can be understood as multiple rounds of multimedia Q&A in which later questions and answers are logically related to earlier ones. Unlike traditional multimedia Q&A, multimedia dialog requires a full understanding of the question-answering history, so the model must efficiently capture the relationships between sequences. In addition to extracting visual features from the image and textual features from the question, the model must therefore also model and reason over the historical question-answering information.
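One common way to bring dialog history into the model is to let the current question attend over encoded past rounds. The sketch below assumes each earlier question-answer round has already been encoded as a vector, and uses plain dot-product attention; `attend_history` and the toy vectors are hypothetical and are not taken from the group's models.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend_history(question_feat, history_feats):
    """Dot-product attention of the current question over past Q&A rounds.

    Returns a context vector (weighted sum of history vectors) and the
    attention weight assigned to each round.
    """
    if not history_feats:
        # First round of the dialog: there is no history to attend to.
        return [0.0] * len(question_feat), []
    scores = [
        sum(q * h for q, h in zip(question_feat, h_vec)) for h_vec in history_feats
    ]
    weights = softmax(scores)
    context = [
        sum(w * h_vec[i] for w, h_vec in zip(weights, history_feats))
        for i in range(len(question_feat))
    ]
    return context, weights


# Toy encodings: the current question and three earlier Q&A rounds.
question_feat = [1.0, 0.0, 0.5]
history_feats = [
    [0.9, 0.1, 0.4],   # round most similar to the current question
    [-0.8, 0.3, 0.0],
    [0.0, 1.0, 0.0],
]

context, weights = attend_history(question_feat, history_feats)
print([round(w, 3) for w in weights])
```

The resulting context vector could then be combined with the visual and question features before classification, so that the answer depends on the relevant earlier rounds as well as the current question.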