In recent years, with the emergence and development of social media platforms and multimedia sensors, more and more data has become available for analyzing social interactions between people. Social multimedia data has great potential to change the way people communicate and collaborate, and can even affect our understanding of life and society. At the same time, face-to-face social interactions recorded by multimedia sensors provide a data foundation for analyzing and understanding interpersonal interaction.

1. Influencer recommendation based on multi-modal social media information

Social media has gradually become a mainstream medium in recent years and has had an important impact on business models. Because social media is ubiquitous, businesses can easily reach and interact with users across social networks, so social networking is now considered a highly effective channel for marketing. In this direction, we jointly evaluate how well micro-influencers fit brands by combining the similarity of their social media content with their social network engagement, and we recommend micro-influencers based on this multimodal information. Related work was published at ACM MM 2019 (Seeking Micro-influencers for Brand Promotion Search and recommendation).

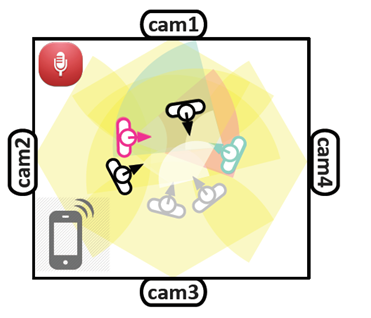

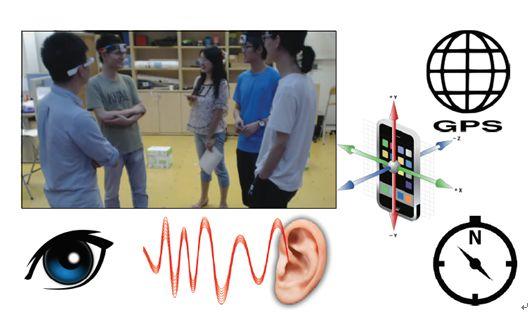
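The joint evaluation described above, which combines content similarity with engagement, can be illustrated with a minimal sketch. It assumes each account is already represented by a fused multimodal feature vector (for example, concatenated image and text embeddings) and a scalar engagement rate. The names `Account`, `cosine`, `rank_micro_influencers` and the weight `alpha` are hypothetical, and the linear blend of cosine similarity and max-normalized engagement is an illustrative stand-in, not the model from the ACM MM 2019 paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Account:
    name: str
    # Hypothetical fused multimodal embedding (e.g., image + text features).
    features: list[float]
    # Hypothetical engagement rate, e.g. (likes + comments) / followers.
    engagement: float = 0.0


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


def rank_micro_influencers(brand: Account, candidates: list[Account],
                           alpha: float = 0.7) -> list[tuple[str, float]]:
    """Rank candidates by alpha * content similarity + (1 - alpha) * engagement.

    Engagement is divided by the largest engagement among the candidates so
    that both terms lie on a comparable scale (an illustrative assumption).
    """
    max_eng = max((c.engagement for c in candidates), default=0.0)
    scored = []
    for c in candidates:
        sim = cosine(brand.features, c.features)
        eng = c.engagement / max_eng if max_eng > 0 else 0.0
        scored.append((c.name, alpha * sim + (1 - alpha) * eng))
    # Highest joint score first.
    scored.sort(key=lambda t: t[1], reverse=True)
    return scored


if __name__ == "__main__":
    brand = Account("brand", [1.0, 0.0, 1.0])
    candidates = [
        Account("a", [1.0, 0.0, 1.0], 0.02),
        Account("b", [0.0, 1.0, 0.0], 0.08),
        Account("c", [1.0, 1.0, 1.0], 0.04),
    ]
    print([name for name, _ in rank_micro_influencers(brand, candidates)])  # ['a', 'c', 'b']
```

With `alpha = 0.7`, account `a` wins on content fit despite low engagement, while `b` has the highest engagement but unrelated content and ranks last. Lowering `alpha` shifts the ranking toward engagement, which is the trade-off a joint score of this kind has to balance.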

2. Body language analysis in social interaction based on multiple sensors

In social interaction, body language plays a very important role in conveying information. Gestures not only enrich the visual expression of spoken content and help convey emotion, but sometimes also convey information that words cannot express. In this direction, we study the analysis of social interaction behavior during presentations. Drawing on sociological concepts of human behavior and communication, we propose a multi-sensor presentation behavior analysis system. We further study deep-learning methods that use multimedia sensor data to automatically generate body language for interpersonal interaction. Using data analysis and artificial intelligence, such methods can give speakers more accurate guidance on body movements that is closely integrated with the content of the talk, helping them express their message better and communicate more effectively. Related results were published in ACM Multimedia 2015, ToMM, and ACM Multimedia Asia 2019 (Multi-sensor self-quantification of presentations; A Multi-sensor Framework for Personal Presentation Analytics; Learn to