With a large amount of labeled training data, supervised learning has achieved great success in many tasks, such as classification and regression. In many practical tasks, however, only a limited number of labeled training samples are available, and labeling data manually is expensive. It is therefore necessary to study weakly supervised learning. We mainly focus on unsupervised and semi-supervised learning, covering both traditional machine learning methods and deep learning methods. Our recent progress lies in the following three aspects:

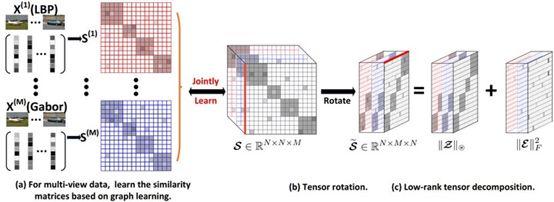

(1) Low-rank Tensor Learning based Multi-view Clustering

Compared with single-view data, multi-view data supports learning a more comprehensive feature representation, which can improve the performance of unsupervised learning. Based on the affinity matrix computed for each view, we construct a third-order tensor and learn the essential representation under a low-rank tensor constraint. This allows the correlation among different views to be explored thoroughly.
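The pipeline above (per-view affinity matrices, stacking into a tensor, a low-rank constraint) can be illustrated with a minimal NumPy sketch. It rests on several assumptions that the text does not state: a Gaussian kernel for the affinities, views stacked along the third mode, and a t-SVD style tensor nuclear norm whose proximal step is singular value thresholding in the Fourier domain. The names `gaussian_affinity`, `tensor_svt`, `sigma`, and `tau` are hypothetical.

```python
import numpy as np

def gaussian_affinity(X, sigma=1.0):
    """Dense Gaussian-kernel affinity for one view; X is (n_samples, n_features)."""
    sq = np.sum(X ** 2, axis=1)
    # Squared Euclidean distances, clipped at 0 to absorb rounding error.
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)
    return np.exp(-d2 / (2.0 * sigma ** 2))

def tensor_svt(T, tau):
    """Tensor singular value thresholding (assumed t-SVD formulation).

    FFT along the third mode, soft-threshold the singular values of every
    frontal slice by tau, then transform back.
    """
    Tf = np.fft.fft(T, axis=2)
    out = np.empty_like(Tf)
    for k in range(T.shape[2]):
        U, s, Vh = np.linalg.svd(Tf[:, :, k], full_matrices=False)
        out[:, :, k] = (U * np.maximum(s - tau, 0.0)) @ Vh
    return np.real(np.fft.ifft(out, axis=2))

# Toy data: two views of the same 6 samples with different feature sizes.
rng = np.random.default_rng(0)
views = [rng.normal(size=(6, 4)), rng.normal(size=(6, 3))]

# Stack the per-view affinities into a third-order tensor of shape (6, 6, 2).
T = np.stack([gaussian_affinity(X) for X in views], axis=2)

# One low-rank shrinkage step on the stacked tensor.
L = tensor_svt(T, tau=0.5)
```

In a full method, a step like `tensor_svt` would typically sit inside an iterative solver that alternates it with other updates; the sketch shows only the low-rank shrinkage itself.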

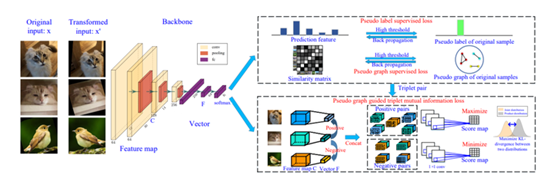

(2) Deep Comprehensive Correlation Mining for Image Clustering

Deep learning methods are very powerful for feature representation. Because label supervision is lacking, it is important to find deterministic correlations that can supervise network training. We propose a highly confident pseudo-graph, pseudo-labels, and triplet mutual information to explore multiple correlations that guide the learning of the neural network. We achieve superior performance on related datasets.
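As a rough illustration of how a pseudo-graph and pseudo-labels can be derived from network outputs, the sketch below thresholds the cosine similarity between softmax predictions and keeps only confident predictions as labels. The function names, the threshold values, and the use of cosine similarity are assumptions made for this example, not details given in the text; the triplet mutual information term is not covered.

```python
import numpy as np

def pseudo_graph(probs, threshold=0.95):
    """Binary graph: 1 where two prediction vectors have cosine similarity above threshold."""
    z = probs / np.linalg.norm(probs, axis=1, keepdims=True)
    sim = z @ z.T
    return (sim > threshold).astype(np.float32)

def pseudo_labels(probs, threshold=0.9):
    """Argmax labels plus a mask marking samples whose top probability exceeds threshold."""
    labels = probs.argmax(axis=1)
    mask = probs.max(axis=1) > threshold
    return labels, mask

# Toy softmax outputs for four samples over three clusters.
probs = np.array([
    [0.97, 0.02, 0.01],
    [0.95, 0.03, 0.02],
    [0.04, 0.92, 0.04],
    [0.40, 0.35, 0.25],   # ambiguous prediction
])

G = pseudo_graph(probs)            # samples 0 and 1 are linked, the rest are not
labels, mask = pseudo_labels(probs)  # the ambiguous sample is masked out
```

Only the linked pairs in `G` and the samples selected by `mask` would then contribute to the training loss, so uncertain predictions do not supervise the network.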

(3) Semi-supervised Learning for Classification

Semi-supervised learning is closer to practical situations, where only a small portion of the samples are labeled. First, we need to make full use of the labeled data to train the network and learn discriminative features. Second, it is important to infer the semantic meaning of unlabeled samples and to investigate the correlation among samples. In addition, we can design pretext tasks to train the network in a self-supervised way.
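The text does not say which pretext task is used. As one well-known example, rotation prediction builds a free supervisory signal from unlabeled images: each image is rotated by 0, 90, 180, and 270 degrees, and the network is trained to predict the rotation. The sketch below covers only the data preparation; `make_rotation_batch` is a hypothetical helper and assumes square images.

```python
import numpy as np

def make_rotation_batch(images):
    """Build a rotation-prediction batch from unlabeled square images.

    images: array of shape (N, H, W) or (N, H, W, C) with H == W.
    Returns the four rotated copies stacked along the batch axis and the
    rotation index (0..3, in multiples of 90 degrees) for each copy.
    """
    rotated = [np.rot90(images, k=k, axes=(1, 2)) for k in range(4)]
    labels = np.repeat(np.arange(4), len(images))
    return np.concatenate(rotated, axis=0), labels

# Two toy 3x3 "images" without class labels.
images = np.arange(2 * 3 * 3).reshape(2, 3, 3)
x, y = make_rotation_batch(images)  # x has 8 images, y holds their rotation indices
```

A classifier trained on `(x, y)` needs no manual annotation, and its learned features can then be combined with the supervised loss on the small labeled set.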